Global Shield Briefing (18 March 2025)

Sharing policy lessons, recognizing linkages across risk, and prioritizing preparedness – and a new team member

The latest policy, research and news on global catastrophic risk (GCR).

Why now?

Global catastrophic risk is seemingly rising faster than ever. Tipping points might be fast approaching. Not just climate disruptions, but also ecological collapse, severe bacterial and fungal infectious diseases, an intelligence explosion, poisoned information ecology, political polarization, economic fragility, tensions between nuclear-armed states, and breakdown of global institutions. Is it coincidence, is it perception, or is it connected?

We have a view. For once, we’ll leave it for you to ponder.

Sharing policy lessons

A new report introduces the concept of “Mutual Assured AI Malfunction (MAIM)”, which the authors believe is needed to deter any state’s aggressive bid for unilateral AI dominance. MAIM would serve as the deterrence pillar of a broader strategy that also includes non-proliferation and competitiveness, much as mutual assured destruction (MAD) has served to deter nuclear conflict.

Two technology policy researchers claim that “A large-scale AI disaster could undermine public trust, stall innovation, and leave the United States trailing global competitors…If Washington fails to anticipate and mitigate major AI risks, the United States risks falling behind in the fallout from what could become AI’s Chernobyl moment.”

Policy comment: Putting aside specific AI policy for the moment – where there is no shortage of views on how to proceed – these recent analyses on AI risk demonstrate the value of viewing global catastrophic risk holistically. When policymakers see the various threats as related rather than separate, they can consider interconnections, similarities and shared characteristics. In the case of AI policy, lessons can be drawn by comparing it to other risk areas, particularly nuclear policy. AI as a technology is unprecedented. But the potential policy frameworks are not. As with nuclear weapons, policymakers will need to navigate the interplay between regulation and innovation, strategic stability between great powers, security from extremists and terrorists, monitoring and verification measures, institutional frameworks and international agreements, trade rules around critical parts and inputs, and planning for worst-case scenarios. This calls for policymakers to bring together experts and advocates from various risk areas – including climate change, pandemics, space weather and food security – to develop and implement policies for catastrophic AI risk, and for global catastrophic risk at large.

Recognizing linkages

Climate change’s impact on bird migratory patterns and nesting habits contributes to a complicated bird flu picture. As global average temperatures rise and extreme weather events change animal migration and movement, “animals are crossing paths in entirely new configurations, making it easier for them to swap diseases.” This raises the risk of zoonotic disease transmission and could reduce food supply through severe contamination.

A new study looks at the impact of extreme weather events on the spread of viruses from urban wastewater. Prolonged or intense rainstorms can overload urban sewer systems, releasing raw, untreated sewage into rivers, lakes and coastal waters. The authors recommend developing targeted risk management strategies in the context of climate change and related natural disasters.

Greenhouse gases could reduce the number of satellites that can sustainably operate in orbit, according to MIT researchers. Carbon dioxide and other greenhouse gases can cause the upper atmosphere to shrink. This contraction reduces the density of the thermosphere, where the International Space Station and most satellites orbit, and the “decreasing density reduces drag on debris objects and extends their lifetime in orbit, posing a persistent collision hazard to other satellites and risking the cascading generation of more debris.”

Policy comment: Climate change is not simply an environmental challenge. It is an infrastructure challenge, a food security challenge, a health challenge, an inflation challenge – even a space issue. By extension, climate policy requires more than reducing greenhouse gas emissions and developing green energy solutions. This is emblematic of other global catastrophic threats: AI does not only require AI policy; pandemics do not only require biosecurity; nuclear policy is not only for defense. Global catastrophic risk – and even mild versions of it – will affect every part of every government. Each agency must evaluate how global catastrophic risk will affect its mandate, its priorities, its ability to execute its mission, its workforce and its budget. A central coordinating office or task force – sitting in a national security council equivalent – could support these agencies in conducting this evaluation and provide a complete picture to senior leadership. Given the wider recognition of climate change as a global problem with catastrophic potential, this exercise could be piloted on catastrophic climate change.

Also see:

An overview of the impact of climate change on fungal infections by Wellcome, a charitable foundation investing over £50 million in fungal research.

Prioritizing preparedness

A post by the Centre for Long-Term Resilience (CLTR) in the UK argues that AI incident preparedness needs urgent policy attention. CLTR defines it as “thinking ahead about how AI could threaten national security or safety if prevention methods are insufficient or lacking, and how societies can effectively respond to these crises.” The post identifies several areas of focus: incident awareness, policy readiness, operational response, and resilience and societal hardening.

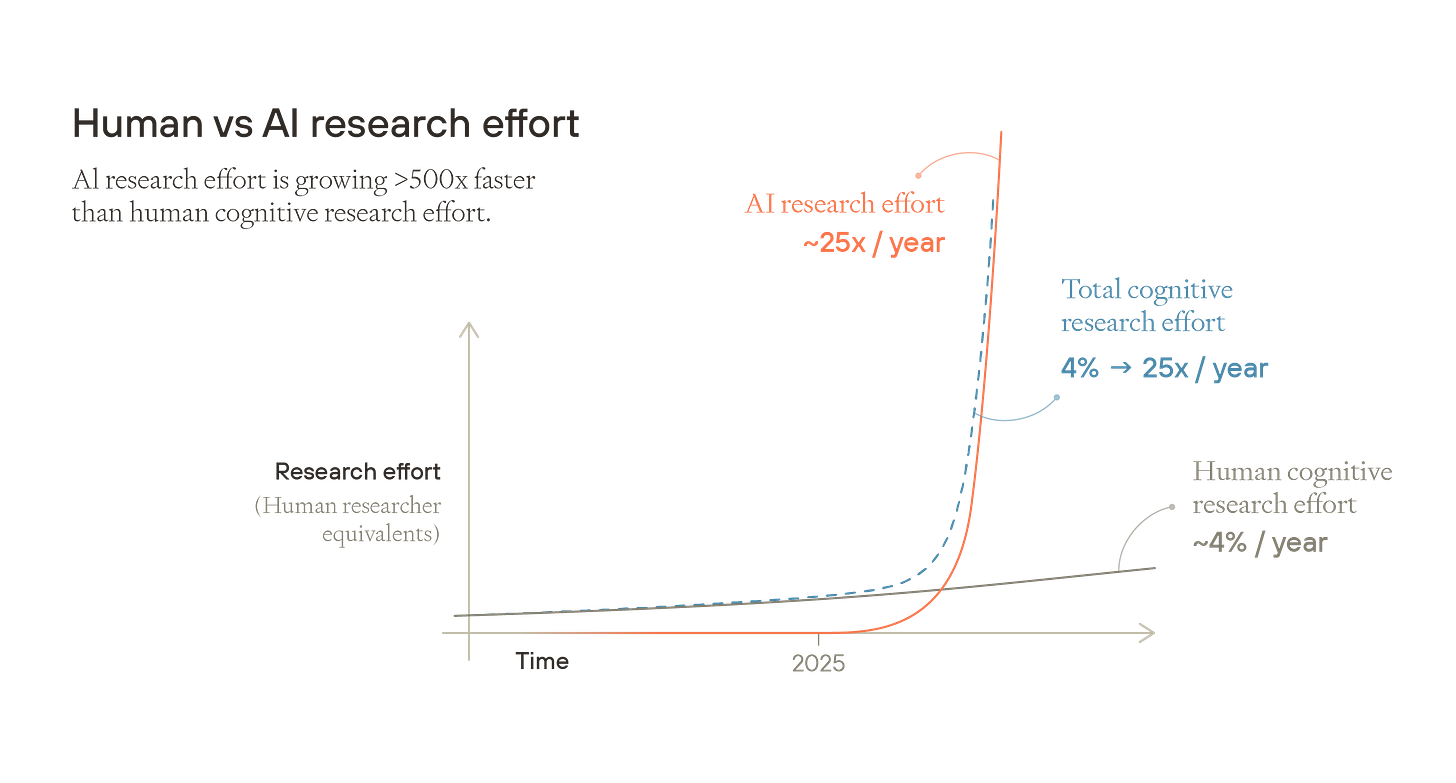

A new report by Forethought, “Preparing for the Intelligence Explosion”, looks at preparedness for artificial general intelligence, given its potential contribution to societal-scale challenges, including new weapons of mass destruction and AI-enabled autocracies. The report recommends improving decision-making for AI across society and governments. As the authors put it, “This strategy seems especially promising, because it addresses many grand challenges all at once, and even addresses ‘unknown unknown’ challenges, which are particularly hard to tackle otherwise.”

Policy comment: The scale and speed of AI’s progress without sufficient safeguards demand a greater focus on preparedness. Researchers and policy advocates are recognizing that preventing an AI-enabled catastrophe should not be the only priority. As the CLTR post identifies, a number of policy areas must be considered. For example, governments must ensure they have the legislation that would allow them to respond effectively to crises if prevention fails, and must update their incident response plans to address novel challenges posed by AI. More broadly, society must be made resilient. From a policy perspective, that could include protection of critical infrastructure, emergency management, and public warning and communication functions. An AI catastrophe – or a catastrophe of any type, for that matter – would severely test social cohesion, government response capacity, and the ability to recover and rebuild.

Also see:

The third draft of the General-Purpose AI Code of Practice, released by the European Commission. It includes a new version of the safety and security section and continues to identify four core safety risks arising from general-purpose AI systems.

Welcoming our new US director

Please welcome Robert Levinson as Global Shield’s US Director. Rob will lead Global Shield’s policy advocacy with the US government on all-hazards global catastrophic risk.

Prior to Global Shield, Rob was on Capitol Hill as the National Security Advisor to Senators Feinstein and Butler of California. Before that, Rob worked in the defense industry and spent 10 years at Bloomberg Government as the Senior Defense Analyst. He also worked as a lobbyist and had a 20-year career in the US Air Force as an intelligence officer, commander, and politico-military affairs officer.

He is a graduate of the Air Force Academy and holds a master's degree in Latin American Studies from the University of California, San Diego and a master's degree in Global Risk from the Johns Hopkins School of Advanced International Studies in Bologna, Italy.

Connect with him on LinkedIn and go to our website if you’d like to reach out directly.

This briefing is a product of Global Shield, the world’s first and only advocacy organization dedicated to reducing global catastrophic risk of all hazards. With each briefing, we aim to build the most knowledgeable audience in the world when it comes to reducing global catastrophic risk. We want to show that action is not only needed but possible. Help us build this community of motivated individuals, researchers, advocates and policymakers by sharing this briefing with your networks.